A Melange of links & thoughts

Welcome to this month’s collection of randomness. I dropped the links up front today because, well, the other part might be a bit tedious to many people (maybe all people). So we’ll serve dessert first…and there are some fun links in there.

Let’s get into it.

Links: Depth cut edition

A few links that have been hanging out on my “to share” list for a long time, and I’m not sure that there’s anything that unites them except that if you talked to me the week after I first read them there’s a good chance I talked about them.

- Whenever McSweeneys satirizes American higher education, it’s always clever but this one is both spot on and not so dissimilar from actual thoughts I have about the kind of education my children might need for the dystopian future.

- A surprisingly entertaining look at how the uber rich actually go about buying that rarest of assets - top tier professional sports teams…but also, a reductio ad absurdum of the desire to turn everything into an asset class and how it denatures our world.

- There was a period in my lifetime when Los Angeles averaged one bank robbery every 45 minutes for a year. This is a remarkably detailed rundown of how the number of bank robberies snowballed, and why Los Angeles was particularly vulnerable. Cue up some Death Cab For Cutie…

Atlas Obscura has two articles about food service in Antarctica that I found fascinating for their consideration of how something so inherent as eating and drinking looks different under such extreme conditions. What it’s like to be a chef in Antarctica, and what it’s like to be a bartender in Antarctica

Just a periodic reminder of the power of collective action mixed with a little creative thinking. Treat all of your service workers with dignity and kindness folks.

Erykah Badu was one of my favorite artists back in the day, and the she just dropped off of my radar. So I was very happy to read this and see that she has chosen to make the work that she wants to make - and has had success following that path - even though it isn’t the most lucrative approach.

Running LLMs locally

In December, the time came for me to upgrade my computer, and I decided to get a GPU that’s beyond what I actually need for my typical day to day usage because it would allow me to do a bit more experimentation with locally hosting LLMs (and maybe eventually large agent models - LAMs - too).

My theory here is that anything that I can do on a prosumer machine right now is something that anyone will be able to do on a mobile device within about 3 years. As the year goes on, I plan to go a bit more in depth about why I think there’s a lot of merit in locally run & open-sourced AI models - both practically and philsophically. For today, I just want to do a bare bones look at what I’m running, what I’m doing with them, and the benefits & tradeoffs compared to proprietary, cloud-based options.

For now, I’ve focused on very mainstream use cases - the kind of things that I think most people would do as part of normal work flows: querying information, generating text, voice transcription, and image generation. I’m keeping the software stack pretty minimal for now and really focused around 3 pieces of software each running a different locally hosted model:

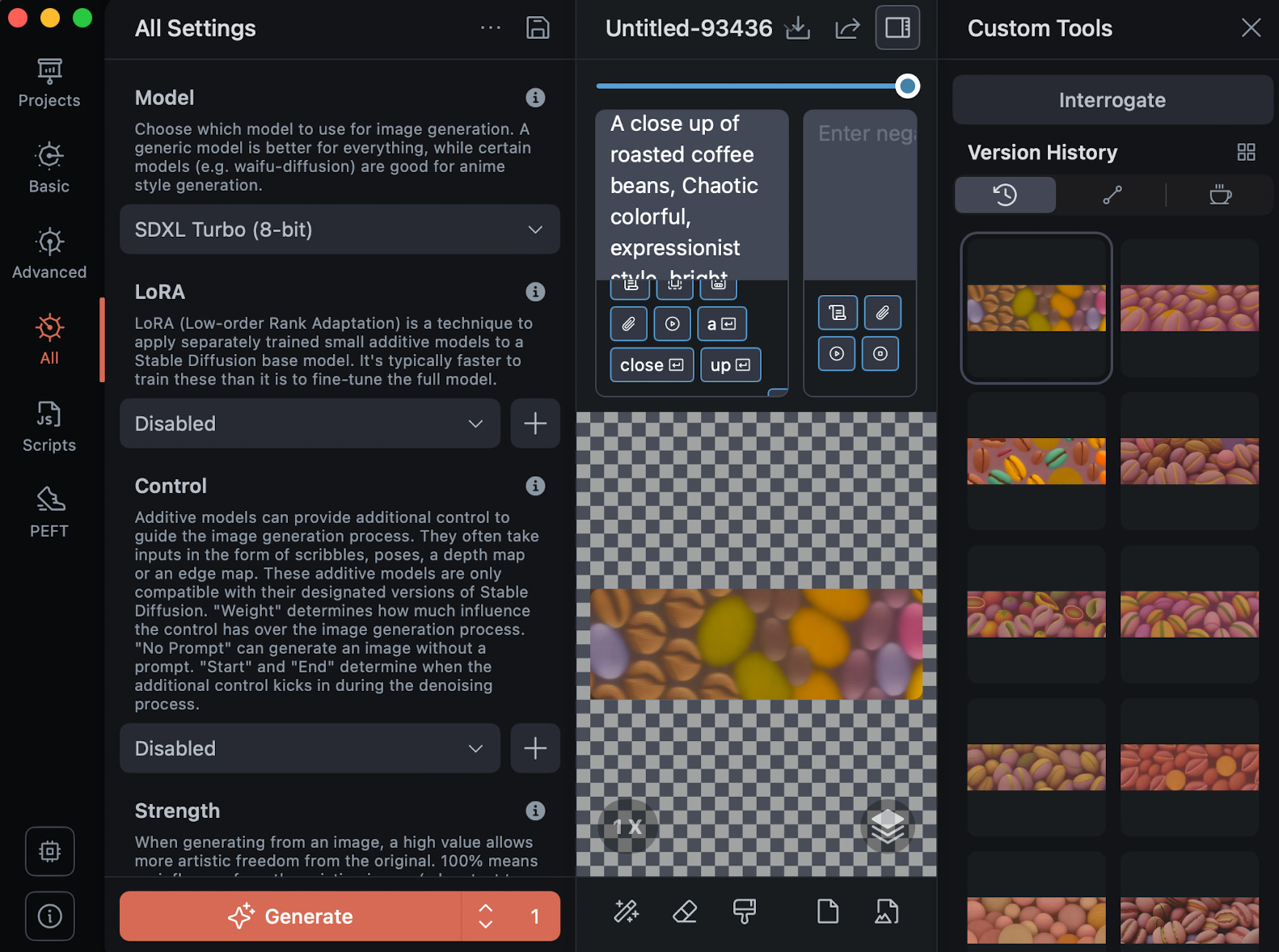

- Draw Things (model: Stable Diffusion Turbo) - this is my image generation suite. The biggest difference between running locally and through a cloud service like Dall-E or Midjourney is the speed of generation. Simply put, running it locally is faster. The secondary piece is that running locally and not having to pay attention to token usage in something like Midjourney lets me go through a lot of iterations of things very quickly. The tertiary consideration - one that may not be valuable for most users - is fine grained control. I had been using DiffusionBee to run a simpler version of Stable Diffusion on my previous device, but Draw Things provides even more control to the user with the tradeoff of a not particularly intuitive interface. The popular cloud-hosted options right now would be Dall-E or Midjourney, and in both cases the primary control the user has is around the prompt engineering. Draw Things gives me different level of guidance, different numbers of steps, and different combinations of models for main image generation, sampling, and refinement.

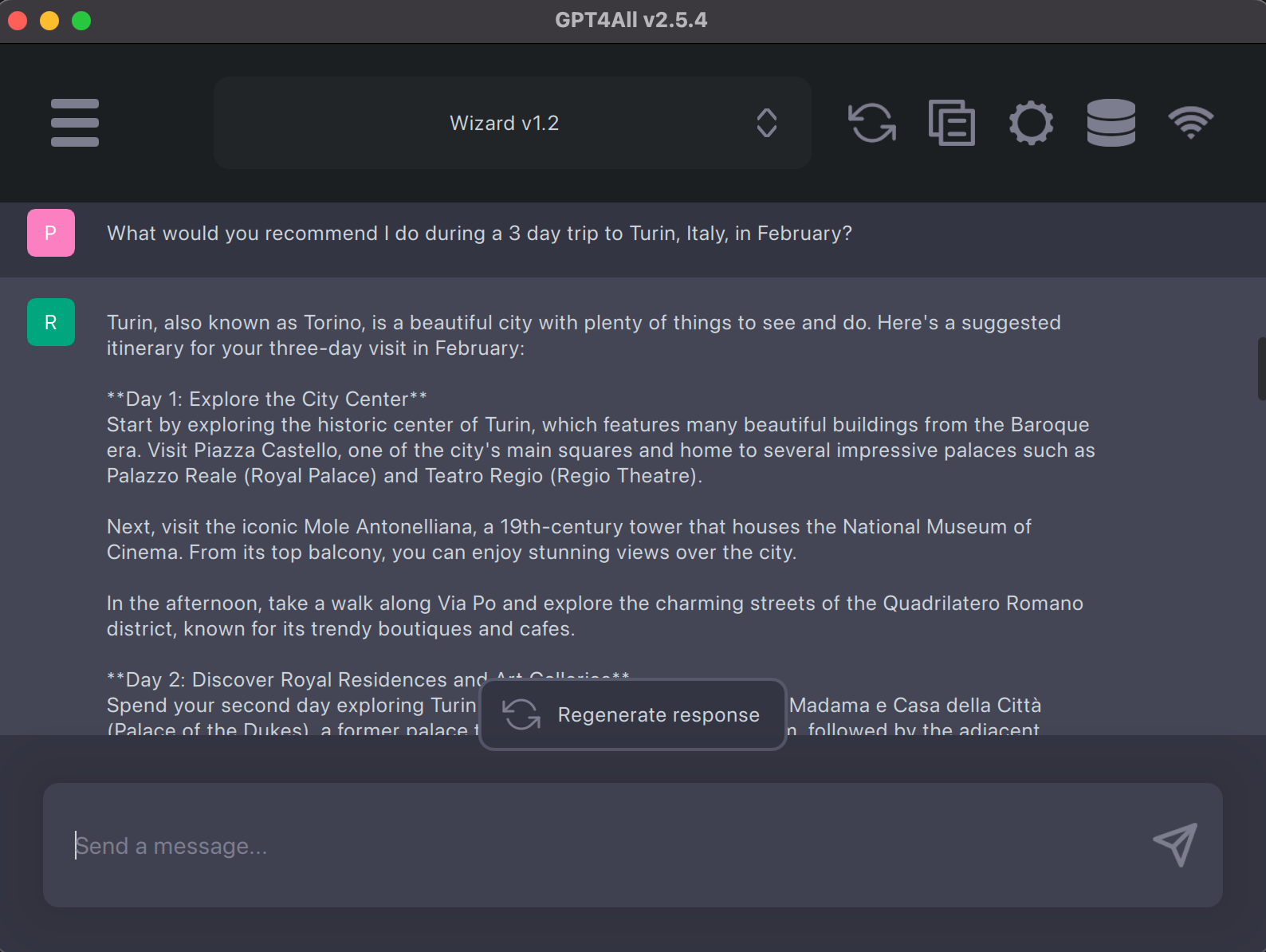

- GPT4All (model: WizardLM/Llama) - this is what I’m using for query & text generation. Again, compared to using a cloud-based solution like ChatGPT, running local with a 14 core GPU means that the responses I get are almost instantaneous and speed won’t get throttled if the OpenAI servers are getting overloaded (though this seems like it’s been less of a problem lately). The other fringe benefit is that my ability to run an LLM isn’t dependent on my network connection…useful if you’re on a flight or a train with spotty WiFi. The GPT4All software also lets you choose from many different LLMs that you want to download and run, so you’re not beholden to any single model. The tradeoff is that you may not be running the most up-to-date most optimized model.

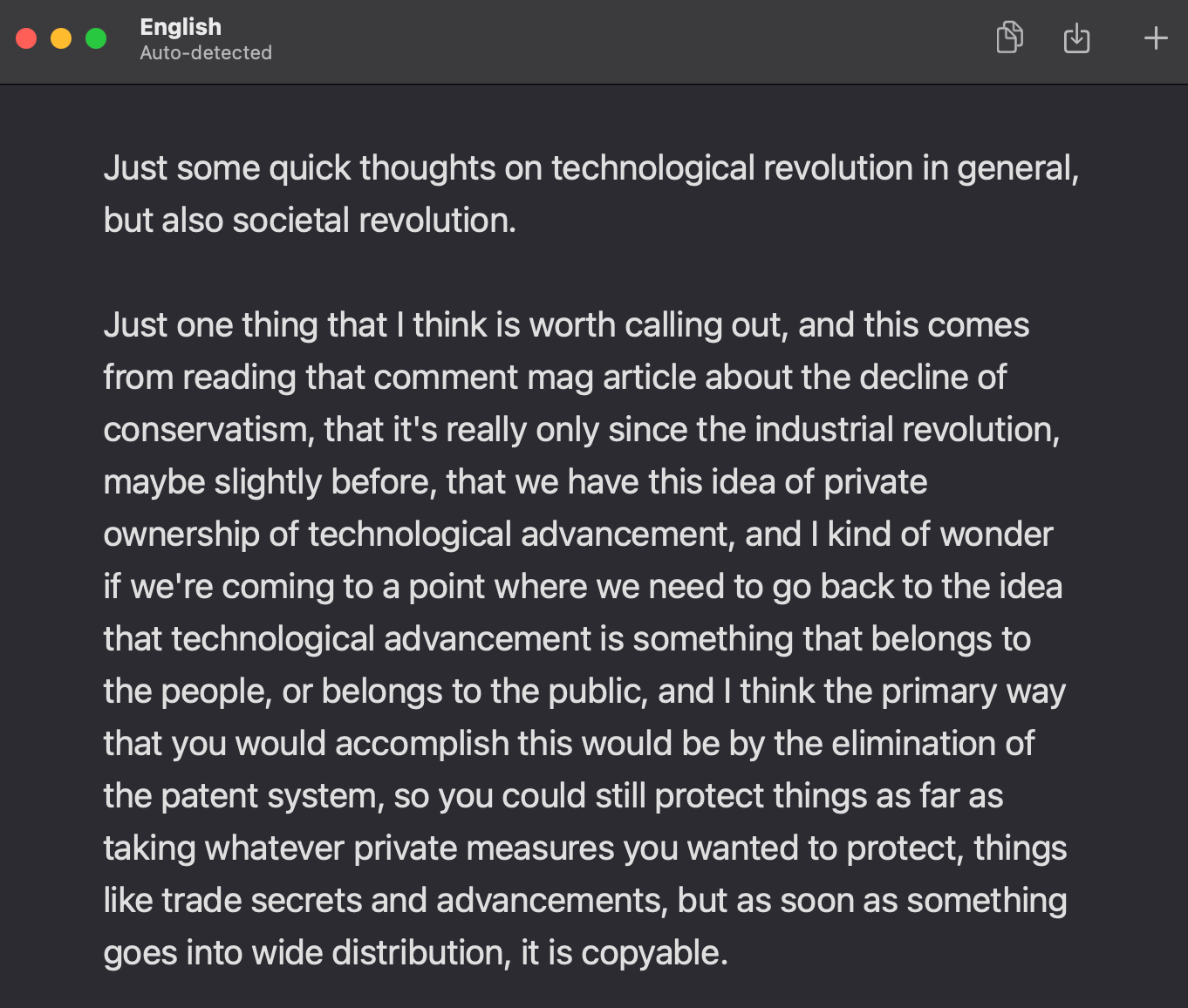

- Aiko (model: Whisper) - ostensibly, Aiko does live transcription. In reality, I’ve found it to be pretty lacking on that front - especially compared to the dictation feature baked into macOS, which is nearly instantaneous and error free. On the other hand, if you want to transcribe a recording of yourself speaking, Aiko is pretty great and really fast, generating an error free transcript in about a fifth of the recording time. It falls short compared to cloud-based options when it comes to multi-party transcriptions where it recognizes and identifies different voices. Of the 3, it’s the one I use the least - almost always just to transcribe voice memos I make for myself. Honestly, if I was running an Android with the TensorFlow optimized chips, I could probably do all of that on my mobile device and would never use something like Aiko in the first place. Still, there’s something to be said for a lightweight, single purpose application.

None of this is particularly mind-blowing, but I think that’s what makes it compelling; it’s not long before we’ll take it for granted that we can do all of these things with 0 latency no matter where in the world we are, using a device that we carry in our pockets. These is a somewhat boring reality that enables a lot of very interesting possibilities.

But we’ll save that for another day.