Subverting intent

Ok, so, yeah I’ve been writing about generative AI a decent amount lately, but that obscures a little bit of a secret about me, which is that I’m rarely enthusiastic about new tech and much more often into finding the unexpected possibilities in old tech and boring tech. For this latest edition of the old newsletter, there are a few threads that I want to weave together related to pretty conventional technological affordances and how they often help us subvert the intended purpose for which the technology was originally designed. Fair warning: this one is a bit long. You’d be well within your rights to scroll through to look at the headings, look at the pictures, and not necessarily read every single word…but I’ve tried to keep it interesting.

Why don’t we start with…

Another chapter in the adventures of a charmed idiot

Sometimes a friend says, “Hey, I’m going to be in [place reasonably proximate to me] for a few days. You should come by.” And I have a small list of friends for whom, when they say this, I want to do everything I can to say, “OK, sure!”

And that is how I ended up co-presenting with two friends at a workshop on the implications of AI for the future of design education at the Design Museum in Munich, Germany. Don’t worry - this isn’t going to be a rehash of an event that was really more of a “guess you had to be there.”

In the course of this workshop, I said something out loud, on mic, from the stage, and the next day one of my friends told me that she disagreed with what I said, so we had a really good dialogue about it which I’ve continued thinking about since. Here’s the thing I said:

Tools are morally neutral. How we use the tool is what gives the tool a moral charge in one direction or another.

As we discussed it during a break in the workshop, a few other people listened in & quite a few of them also weighed in on her side. A general rule that I have found to be wise guidance is this: when many people who you respect and who are almost certainly smarter than you disagree with you, their perspective probably has at least some amount of merit to it - so you might want to interrogate your perspective.

With that in mind, allow me a brief but hopefully relevant digression about dogs. You may be aware that there are many breeds of dogs. Purebred dogs come from a lineage that was cultivated for a specific purpose - working dogs, shepherding dogs, hunting dogs, and so on. Consequently, each dog from these breeds is an expression of a specific set of values and commitments of the humans who bred, raised, and employed these dogs for that purpose. Over multiple generations, those humans refined and optimized these breeds toward that intended purpose. That doesn’t mean that I couldn’t, for example, train a shepherding dog to fetch bird carcasses. It would just take more work than if I trained a bird dog. The categorical classification of the dog breeds is meaningful, but it doesn’t prohibit an individual dog from being able to do things outside of its categorical classification.

And this is where I think I was wrong to say that tools are morally neutral. Even more than dogs, tools were created by people for specific purposes that are also an expression of a set of values and commitments. Some of those values and commitments are relatively weak and the tools they are associated with are sufficiently general purpose that they can be used in a variety of ways that can override the initial moral state. That can look a lot like moral neutrality. To return to the dogs: Lady, she of “& the Tramp” fame - she could go either way.

But some tools have a strong expression of values and commitments, such that trying to utilize them for an opposed purpose involves a lot of difficulty. I think it’s reasonable to say that the stronger the initial moral state of a tool, the more difficult it is to change its intent. To put it in dog speak, it’s not that a golden retriever could never viciously attack a child…but it would take a pretty complex chain of events for that to happen[1].

So, I think I want to restate my position:

Tools are not inherently virtuous or malicious, but to use them toward a specific purpose the user has to understand the extent to which they have to subvert the tool’s intended purpose.

Yes, for example, you can take that technology that was used to create a super weapon and use it as an energy source, but it’s a pretty heavy undertaking.

I reserve the right to continue evolving my thinking on this point…especially if Savannah keeps telling me I’ve got it all wrong[2].

Flowing from that, here are 2 examples of things I’ve been doing recently to subvert the intended purpose of some tools. My general approach in all of this is that I want to see how much I can use off-the-shelf, readily available tools and make them work in combination to do something a bit differently. Nothing you’re going to see below is all that revolutionary[3].

Reclaiming my attention

I recently saw an app that lets you choose apps on your phone and makes you do a 10-second breathing exercise before you can open those apps. This is a total Skinner box of behavioral modification…but I don’t disagree with its intent, because I think it probably conditions a person both to not open those apps as much and to access their cerebral cortex when they do. It could be an effective counter to all of the various BJ Fogg tools of technological persuasion that are baked into engagement-maximizing algorithms.

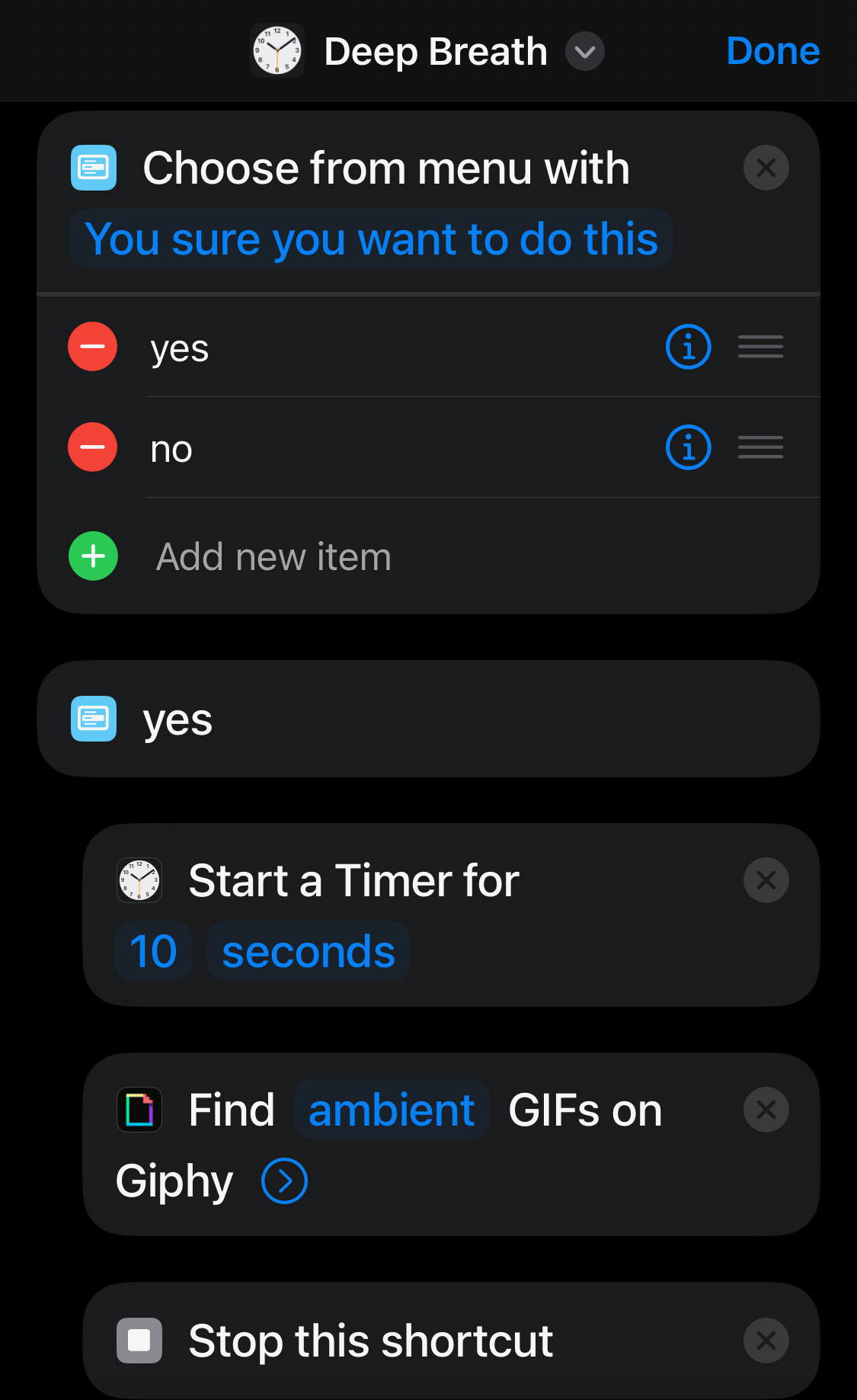

Rather than using the app, though, I thought I’d see if I could build it myself using Apple Shortcuts. It turns out that it’s not very hard to make a rough version of this:

I now have this in front of nearly every app that I find myself mindlessly switching between when my executive functioning is fried. After a few weeks, I find that I open them less and spend less time in them when I do open them.

The downfall of the Skinner box, though, is that its efficacy diminishes over time if it doesn’t diversify the feedback mechanism. But also a Skinner box usually requires the person to cede control to it. This shortcut isn’t exactly a Skinner box, because I can always just ignore it. If I want to immediately swipe out of the image overlay and turn off the countdown timer, I can - and sometimes I do! But it acts as a reminder to me of how I want to use my phone and how I want to spend my attention. If you wanted to do the same, you could build it yourself in like 5 minutes, but you can also grab my version of it here…or you could buy the one sec app.
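If you think better in code than in Shortcuts actions, the logic of that pause is simple enough to sketch in a few lines of Python. This is only an illustration of the idea, not the shortcut itself: the app names, the `GATED_APPS` set, and the `breathing_gate` function are all made up for the example, and the `skip` flag stands in for swiping the overlay away.

```python
import time

# Hypothetical set of apps to put the pause in front of.
GATED_APPS = {"Instagram", "Mail", "News"}


def breathing_gate(app, seconds=10, skip=False, sleep=time.sleep):
    """Return how many seconds were waited before `app` is allowed to open.

    Ungated apps open immediately. Gated apps get a countdown, which the
    user can dismiss (skip=True), because the pause is meant to be a
    reminder and not a lock.
    """
    if app not in GATED_APPS or skip:
        return 0
    for remaining in range(seconds, 0, -1):
        print(f"Breathe... {remaining}")
        sleep(1)  # one tick of the countdown
    return seconds


if __name__ == "__main__":
    # Short countdown so the demo finishes quickly.
    waited = breathing_gate("Mail", seconds=3)
    print(f"Opening Mail after {waited} seconds")
```

The point of keeping `skip` in there is the same as in the shortcut: the pause only works as a nudge if ignoring it stays possible.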

In pursuit of the omni device

I drafted this section in a cafe on a MacBook while my iPad sat in my backpack on the floor next to me and my iPhone lay facedown on the table. That’s 3 devices that have a significant amount of functional overlap - not perfect overlap, to be sure, but the more I think about it, the more I realize I don’t have a ton of use cases that desperately need the compute power of my laptop. And that has got me thinking: why couldn’t I have a single omni-use device? Partially this is driven by the idea of running an LLM locally on my phone, and the idea that there’s a probable version of the future where I’m running a more agentic model that is quick and accurate in its responses and actions. We don’t live in that future quite yet, but I’ve been trying to think of the more compute-intensive use cases that I don’t use my phone for, and in my case it comes down almost entirely to editing media (video & audio), most things that involve visual design, the extremely limited instances in which I’m in an IDE, and anything for which I’d use a local LLM.

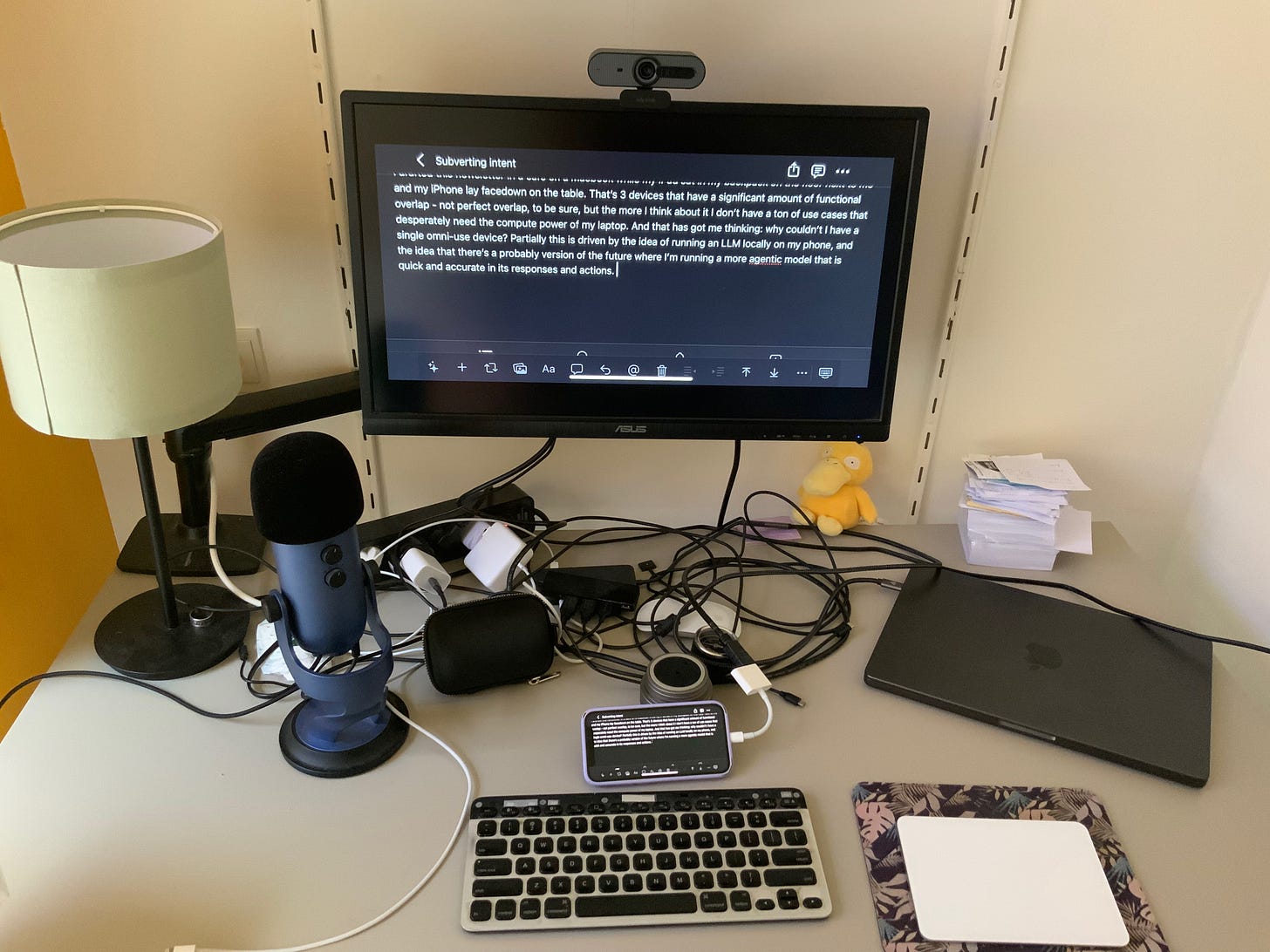

I recently bought a $20 HDMI/Lightning dock connector that lets me hook my phone up to a monitor and keyboard as a way of exploring how these use cases would work on an omni-device[4], and at first I was considering an in-depth write-up of each use case and what tradeoffs were involved. It turns out, though, that it’s all kind of the same thing and it boils down to just a few points:

- It’s the software, not the hardware. This one is probably pretty obvious? It’s mostly just Moore’s Law stuff: very little of what we do is work that has become substantially more computationally intense over the last 4 or 5 years, and the computers we were using 4 or 5 years ago are less powerful than the latest generation of phones we carry in our pockets. The compute power of our phones is up to the task for probably 95% of use cases, and for a lot of people I bet that number is 100.

- It’s interoperability of peripherals. You can see in the photo that I have a standalone trackpad that I use with my computer (and, indeed, with my iPad). It just doesn’t work with the phone. Configuring most external peripherals to work with the phone is a little bit of a chore, certainly more than with the computer. But see the first bullet - that’s not actually a technical limitation in the hardware; it’s a software thing. I’m old enough to remember when that was a reality for desktop computers too, but we’ve developed libraries for interoperability that usually make it pretty trivial[5].

- It’s a different mental model of interaction[6]. Using the keyboard as my primary input with the phone has required learning some new shortcuts and commands; using it without a mouse was something I anticipated being more difficult, and it was at first…until I remembered that I could still use touch input on the screen just like I do when I use it as my phone. And, strangely, that works better than having a big touch screen where you get the whole Gorilla Arms phenomenon if you try and use the touch function too much. The phone itself acts a bit more like Apple’s ill-fated Touch Bar, except a lot more capable and familiar. Still, there’s no denying that it’s a kind of Frankenstein approach to interaction design. It’s still a software thing, though! If a company cared enough to optimize this model, there’s a way that it could work…but I’m not sure it would ever have mass appeal.

Listen, I’m not the United States Department of Justice - I’m not coming for Apple because they’ve locked me into this device ecosystem. I understand that they’re making choices on all of the above points and have decided - at least in part because their business model is largely based on hardware sales - to choose one approach versus the other. But I think I’m also not done with this experiment. Using my phone as my primary desktop computer has been less of a hindrance than I had initially anticipated, so I think I want to look into the next level, which I think is how I could use it to take the place of my laptop when I’m on the go. The phone is already designed to be a mobile device, and I’ve established that I can get it to work decently well with an external monitor, so now I want to explore if I can hack together a form factor that is easy enough to carry with me and provides a monitor and battery that I can connect to anywhere and use my phone like a laptop…watch this space?

[1] And, yes, if you Google it, you can find some examples.

[2] And Annette…and Madeleine…and Regina.

[3] Or all that difficult.

[4] And, in fact, am writing this section right now within that setup.

[5] Unless we’re talking about printers, which is just an endless hellscape…one which is a consequence of corporate choice, though, not technical limitation.

[6] And, therefore, interaction design.